Technological and social undercurrents

No major technological revolution happens from one advancement alone; it is often when a few technological changes converge that a transcendent moment of advancement breaks through. Personal computers combined better interface technologies, substantial processing power and a complex networking framework to enable a massive jump in human productivity and wealth. Influential economic bodies like the World Economic Forum have grouped technological advancements into ages spanning decades and centuries to help people understand the broader undercurrents that connected the changes. The unifying objective of each age and advancement has been to improve human capability and power, often by applying new technologies in markets to increase wealth and standards of living.

On the whole, this has been good for humanity, with some very clear caveats. We have a higher standard of living globally than we did before the first industrial age began in the late 1700s. We also have the capacity to effectively end the world with nuclear armaments and are slowly degrading the living conditions of our planet through industrialized agriculture and carbon-based fuels. Our population has never been at greater risk of global pandemics, due to high-density urban populations and frequent international travel. The complicated economic, social and political systems that we have developed over generations could lead to disastrous global outcomes if they are not properly managed and continuously redirected as we learn more about their effects. The challenge humanity has faced in each age is deciding how best to direct its technological advancements, and each age has presented advancements at a faster pace than the last, with strong complementarity between the changes.

The current technological age is characterized by the increasing connectivity of all devices and the growing power of the processing systems these devices can access, either within themselves or in other environments. The patterns behind the teams and projects reviewed in Successful Applications give a fair perspective; combining them with tangential patterns that will soon affect teams helps build a more complete map of the future. This piece reviews the patterns of technological advancement that matter to Machine and Deep Learning, places them in their appropriate context and distills their impacts down to the parts that relate specifically to ML and DL.

This segment will cover:

| # | Topic |
|---|---|
| 1 | Neuroscience |
| 2 | Quantum Processors |
| 3 | Digital Regulations |
| 4 | Data Lakes |
| 5 | Internet of Things |
| 6 | Social engineering |
| 7 | Open-source code |
| 8 | Space travel |

Neuroscience

The ability of machines to learn is based in large part on the study of how humans learn. Our neural systems work in a very different context from machine systems: machines process data and make decisions much more quickly, while human systems respond to new situations and interpret data more flexibly, letting them better handle unforeseen scenarios. There are exceptions to this general rule, though it holds in most scenarios. Human brains distribute processing power across a series of interconnected units, with groups of these units specializing in functions the brain regularly encounters. For example, the occipital lobe, which contains the visual cortex, does much of the processing of visual input gathered by the eyes and relayed through the optic nerve.

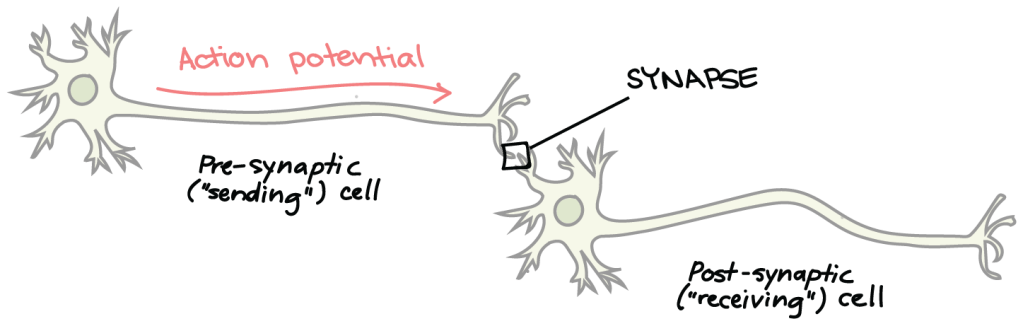

On a macro level, researchers have developed a good understanding of how the brain is organized and a good sense of how certain functions and units have evolved over time. On a micro level, scientists have been able to observe how neurons communicate with one another across synapses through electrical and chemical processes, allowing the brain to coordinate enough processing power to complete complicated functions. The accompanying diagram covers the basic method neurons use to transmit information to one another: the action potential, an electrical impulse that travels along each neuron.
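
To make the idea of an action potential concrete, the sketch below uses a leaky integrate-and-fire model, a standard simplification from computational neuroscience rather than the detailed electrochemical process in the diagram. The simulated voltage leaks back toward a resting level, rises with incoming current, and "fires" when it crosses a threshold before resetting. The function name and every parameter value are illustrative assumptions, not measurements.

```python
def simulate_lif(
    input_current: list[float],
    dt: float = 1.0,             # time step (ms)
    tau: float = 10.0,           # membrane time constant (ms)
    v_rest: float = -65.0,       # resting potential (mV)
    v_reset: float = -70.0,      # potential right after a spike (mV)
    v_threshold: float = -50.0,  # firing threshold (mV)
    resistance: float = 10.0,    # membrane resistance (megaohms)
) -> tuple[list[float], list[float]]:
    """Simulate a leaky integrate-and-fire neuron.

    Returns the membrane voltage at each step and the times at which the
    neuron fired. All parameter values are illustrative, not measured.
    """
    v = v_rest
    voltages: list[float] = []
    spike_times: list[float] = []
    for step, current in enumerate(input_current):
        # The leak term pulls v back toward rest; the input current (nA) pushes it up.
        dv = (-(v - v_rest) + resistance * current) / tau
        v += dv * dt
        if v >= v_threshold:
            # Threshold crossed: record a spike (the "action potential") and reset.
            spike_times.append(step * dt)
            v = v_reset
        voltages.append(v)
    return voltages, spike_times


voltages, spikes = simulate_lif([2.0] * 200)  # steady input strong enough to fire
_, silent = simulate_lif([1.0] * 200)         # weaker input never reaches threshold
print(len(spikes), len(silent))
```

With these assumed values, the stronger input produces a regular train of spikes while the weaker one leaves the neuron silent. The threshold-and-reset step is, loosely, the idea that artificial neural networks borrow in the form of nonlinear activations.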

There are further complexities within our brains that allow us to process information in many different ways; one example is how the two hemispheres process information in serial or parallel fashion. Scientists and medical leaders have developed many methods for scanning and understanding the brain, and new methods are still being developed. Unfortunately, they have hit a speed bump. The detailed techniques researchers use to map neural connections are incredibly time-consuming and cannot be scaled to map an entire brain in a time- and resource-efficient fashion, while the macro techniques that can map the entire brain quickly are not granular enough to give researchers new insights into how the human mind operates.

The level of detail many researchers are targeting right now is the connectome, a map of all the neural connections in a brain. Researchers have begun mapping the connectomes of smaller brains, such as those of mice, but scaling this to the human brain will take time. In the same way that mapping the human genome enabled a new era of gene-based medicines and may open the field of eugenics, the hope is that creating a connectome of the human brain will open up other futurist technologies. Key ideas include transmitting information directly to the human mind, copying human identities to computers, curing brain conditions and enhancing the capabilities of human minds with new or improved components. These directions have huge implications for deep learning, where data scientists and engineers seek to replicate the capabilities of human learning in computer systems; these teams are often inspired by the advances of their medical colleagues and frequently seek to collaborate with them.
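
In data terms, a connectome is a directed graph: neurons are nodes and synapses are edges. The minimal sketch below assumes that representation, uses hypothetical neuron names, and runs a breadth-first search to find which neurons a signal could reach within a given number of synapses. Real connectome datasets also record synapse type and strength, which this toy omits.

```python
from collections import deque

# Hypothetical toy connectome: each neuron maps to the neurons it synapses onto.
TOY_CONNECTOME: dict[str, list[str]] = {
    "sensory_a": ["inter_1", "inter_2"],
    "inter_1": ["motor_x"],
    "inter_2": ["inter_1", "motor_y"],
    "motor_x": [],
    "motor_y": [],
}


def reachable_within(connectome: dict[str, list[str]], source: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search from `source`.

    Returns every neuron reachable in at most `max_hops` synapses, mapped to
    the smallest number of synapses needed to reach it.
    """
    hops = {source: 0}
    queue = deque([source])
    while queue:
        neuron = queue.popleft()
        if hops[neuron] == max_hops:
            continue  # do not expand past the hop limit
        for target in connectome.get(neuron, []):
            if target not in hops:
                hops[target] = hops[neuron] + 1
                queue.append(target)
    return hops


synapse_count = sum(len(targets) for targets in TOY_CONNECTOME.values())
print(synapse_count, reachable_within(TOY_CONNECTOME, "sensory_a", 2))
```

Even this tiny structure hints at the scaling problem: the number of possible connections grows with the square of the number of neurons, which is part of why mapping larger brains is so demanding.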

If we place the machines we can create now on an evolutionary scale relative to our own evolution, they are comparable to very simple organisms like worms or bugs. Advancements in the understanding of the human mind will greatly accelerate the evolution of machine systems, though these impacts will likely be seen over decades rather than years. Currently, scientists are using one of the most advanced supercomputers in the world to understand the connectome of a mouse. New methods, importantly ones focused on mesoscale mapping, are being developed that will accelerate this work for mammalian and other complicated neural structures, as will advancements in computer processing capabilities. It seems clear that scientists and researchers are some way off from understanding the human mind in enough detail to replicate the breadth and depth of all its functions in machine systems.

Quantum Processors

Quantum is a subject that appears in many popular films, where it explains away complicated or sticky aspects of the plot as a deus ex machina. In media it is presented as a magical concept that allows for time travel, teleportation, matter reduction and other amazing capabilities. While the reality is not on this scale, the implications of the underlying discoveries for computer processors are substantial. A quantum is the smallest discrete amount of a physical property, such as energy, and quantum mechanics (or quantum physics) describes the properties and measurable patterns of matter on an atomic scale. What is fascinating is that at this scale, the rules of matter we are accustomed to begin to change. Uncertainty becomes part of reality itself: a particle can exist in a superposition of states, in a sense both there and not there, until it is measured.

In terms of impact, quantum processing is the change from binary bits to qubits as the basic unit of information that computer systems use to connect with their hardware. The base machine code of every computer system built and in use today represents information as combinations of 0s and 1s, and each bit is always one or the other. A qubit is not a third value alongside 0 and 1: it can exist in a superposition of both, described by two amplitudes that determine the probability of reading a 0 or a 1 when the qubit is measured, at which point it collapses to a single classical bit. Introducing this capability at the system and processing level is a massive change. The machine code, assembly and higher-order programming languages in use now have been developed over time around the characteristics of bits. These languages are Turing-complete, can exchange information with one another, and form an interlocking network of systems throughout the internet. New qubit-based languages will have to develop over time alongside new qubit machine code and assembly languages to take full advantage of the new processors' capabilities.
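
A qubit can be sketched as a pair of amplitudes rather than as a third value. The toy simulation below, written for illustration only, applies a Hadamard gate to put a qubit into an equal superposition and then measures it; each measurement yields an ordinary 0 or 1. The names `Qubit`, `ZERO`, `hadamard`, `probabilities` and `measure` are our own, and a classical simulation like this needs memory that grows exponentially with the number of qubits, which is part of why real quantum hardware matters.

```python
import math
import random

# A qubit state is a pair of amplitudes (alpha, beta) for the outcomes 0 and 1,
# normalized so that |alpha|**2 + |beta|**2 == 1. It is not a third digit.
Qubit = tuple[complex, complex]

ZERO: Qubit = (1 + 0j, 0j)  # the state "definitely 0"


def hadamard(q: Qubit) -> Qubit:
    """Hadamard gate: maps 0 to an equal superposition of 0 and 1 (and back again)."""
    alpha, beta = q
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(q: Qubit) -> tuple[float, float]:
    """Born rule: each outcome's probability is its amplitude's squared magnitude."""
    alpha, beta = q
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(q: Qubit, rng: random.Random) -> int:
    """Measurement collapses the superposition to one ordinary bit, 0 or 1."""
    p0, _ = probabilities(q)
    return 0 if rng.random() < p0 else 1


rng = random.Random(42)
plus = hadamard(ZERO)
ones = sum(measure(plus, rng) for _ in range(10_000))
print(probabilities(plus), ones)      # about half the measurements read 1
print(probabilities(hadamard(plus)))  # back to (about) 1.0 and 0.0
```

Applying `hadamard` twice returns the qubit to a definite 0 because the two contributions to the 1 outcome cancel. This interference between amplitudes, not an extra digit, is what quantum algorithms exploit.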

In computational complexity theory, researchers group algorithmic problems into classes based on how the resources needed to solve them grow with the size of the input. Digital processors efficiently handle problems in the class P, those solvable in polynomial time. Quantum computers are expected to efficiently handle a different class, BQP (bounded-error quantum polynomial time), which includes some problems in NP (nondeterministic polynomial time), such as factoring large integers, for which no efficient classical algorithm is known. Researchers do not expect quantum computing to suddenly make all computational problems solvable: NP-complete problems, which arise in bioinformatics, genetics, cheminformatics, social engineering and other fields, are widely believed to remain intractable.
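
Subset sum (does some subset of a list of numbers add up to a target?) is a classic NP-complete problem, and it shows the asymmetry behind the NP class: checking a proposed answer is quick, while the brute-force search below may have to examine all 2^n subsets. This is an illustrative sketch with our own function names, not an efficient solver; no known classical or quantum algorithm solves NP-complete problems in polynomial time in general.

```python
from itertools import combinations
from typing import Optional


def verify(numbers: list[int], subset: list[int], target: int) -> bool:
    """Checking a proposed answer is fast: confirm each element is available, then sum."""
    remaining = list(numbers)
    for x in subset:
        if x not in remaining:
            return False
        remaining.remove(x)  # each number may be used at most once
    return sum(subset) == target


def brute_force(numbers: list[int], target: int) -> tuple[Optional[list[int]], int]:
    """Search subsets from smallest to largest; return (answer or None, subsets checked).

    In the worst case this examines all 2**len(numbers) subsets.
    """
    checked = 0
    for size in range(len(numbers) + 1):
        for combo in combinations(numbers, size):
            checked += 1
            if sum(combo) == target:
                return list(combo), checked
    return None, checked


nums = [3, 34, 4, 12, 5, 2]
print(brute_force(nums, 9))     # finds a subset after a handful of checks
print(brute_force(nums, 1000))  # no answer: every one of the 2**6 subsets is checked
```

Adding one more number to the list doubles the worst-case search, while verification cost grows only linearly; that gap is what the P versus NP question is about.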

There are two theorized benefits motivating this titanic system shift. First, for certain problems, quantum algorithms need far fewer steps than the best known classical algorithms. Quantum computers are not faster at everything, but Grover's algorithm, for example, searches N unsorted items in roughly √N steps rather than N, and Shor's algorithm factors integers superpolynomially faster than the best known classical methods. Second, the Quantum Processing Unit (QPU) will be able to perform functions that binary processing units like CPUs, GPUs or TPUs cannot perform in any practical amount of time. These new functions include code breaking, new encryption methods and using quantum entanglement to ‘teleport’ information from sender to receiver. These two changes represent quantum advantage and quantum supremacy, respectively.
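
Grover's search is the textbook example of a quantum speedup, and the sketch below simulates its amplitude arithmetic classically for one marked item. An "oracle" flips the sign of the marked item's amplitude, then every amplitude is reflected about the mean; after about (π/4)√N rounds the marked item is very likely to be measured, whereas a classical search needs about N/2 checks on average. Because this runs on an ordinary computer, it shows the mathematics of the speedup, not the speedup itself; the function name is our own.

```python
import math


def grover_search(n_items: int, marked: int) -> tuple[int, float]:
    """Classically simulate Grover's algorithm for one marked item among n_items.

    Returns the number of oracle queries (iterations) and the probability that
    a final measurement yields the marked item.
    """
    # Uniform superposition: every item starts with amplitude 1/sqrt(N).
    amplitudes = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return iterations, amplitudes[marked] ** 2


for n in (16, 1024):
    queries, p = grover_search(n, marked=3)
    print(f"N={n}: {queries} quantum queries, success probability {p:.3f}; "
          f"classical search averages about {n // 2} checks")
```

For 16 items this reports 3 queries and a success probability of about 0.96; for 1,024 items, 25 queries and better than 0.99, against an average of 512 classical checks. The gain is quadratic, far smaller than what Shor's algorithm offers for factoring.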

That quantum computers can do things digital computers cannot raises an interesting question about the future of digital computers and devices, which is best considered in the context of analog technologies. The difference between digital and analog is the signal used: digital communicates with discrete 0s and 1s, while analog captures negative and positive values along a continuous scale. We still use analog in devices for signal capture, like camera components and microphones, and for signal transmission, like radio or headphones. Quantum computers will appear in the cloud first, with capacity reserved for big problems backed by large research and development budgets. Over decades they will become more commercially available, while digital technologies, like analog ones before them, remain focused on the problems better suited to their design.
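
The analog/digital distinction can be illustrated by quantization: an analog value can sit anywhere on a continuous scale, while a digital system must snap it to one of a fixed number of levels. The sketch below assumes evenly spaced levels across a range of -1 to 1 and uses our own function name; more bits mean finer levels and a smaller rounding error.

```python
import math


def quantize(value: float, bits: int, v_min: float = -1.0, v_max: float = 1.0) -> float:
    """Snap a continuous (analog) value to the nearest of 2**bits evenly spaced digital levels."""
    levels = 2 ** bits
    step = (v_max - v_min) / (levels - 1)
    clamped = min(max(value, v_min), v_max)  # values outside the range saturate
    index = round((clamped - v_min) / step)
    return v_min + index * step


analog = math.sin(1.0)  # a sample from a continuous signal
for bits in (1, 4, 8):
    digital = quantize(analog, bits)
    print(bits, digital, abs(digital - analog))
```

With one bit, the sample of about 0.841 is forced all the way to 1.0; with eight bits, the error is at most half of one step of 2/255.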

In Machine Learning, quantum computers could enable teams to approach larger and more complex datasets, most significantly in deep learning models, and greatly advance the field once teams figure out how to apply the technology correctly. At the 2020 TensorFlow Dev Summit, Google announced TensorFlow Quantum, an open-source library for researchers building quantum ML models. In 2019, Google announced that it had achieved quantum supremacy, based on results from a project developed in collaboration with NASA; IBM disputed the claim. Microsoft, Rigetti Computing and other teams are also working hard to develop processors and languages that make quantum computing more accessible. Quantum computing as a field was pioneered by Richard Feynman, among others, in the early 1980s, giving theorists and mathematicians decades to understand how quantum mechanics will affect informatics. The change will be profound, it will take time, and it is currently being led by researchers and scientists.

Digital Regulations

A key aspect of each industrial age is how people and societies decide to apply its advancements. If we take 1784 as the start of the first industrial revolution, it took the United Kingdom some 70 years to regulate labour to prevent the gross exploitation and death of children sent to work in mines and factories. Mass production in the second industrial revolution created the armaments needed for global wars and the mechanization of death. Rules and practices for the use of arms in war were agreed to in the Hague Conventions at the turn of the 20th century, about 30 years after the start of the second industrial age, but were subsequently ignored in both world wars. The third age seems to have given the world climate change and failures of internal controls in corporations; there is hope that change is underway, but no codes of conduct or international rules with penalties have been firmly established. The fourth industrial age is underway, with revolutionary technologies capable of accelerating humanity into new ages actively being developed. The principal concern of the current age seems to be how the use of data, integral to Machine Learning, is affecting individuals' well-being and influencing society.

Conversations around data and citizen protection have focused almost exclusively on privacy. Today 107 countries have laws in place to protect data and ensure citizen privacy, with varying norms that can make compliance difficult and costly for international companies. The most successful internet companies focused exclusively on providing digital services, like Google and Facebook, have chosen to monetize these services through consumer data. Taking some rough numbers, Google and Facebook would have to charge their active users a monthly fee of US$6.74 and US$2.36 respectively to generate the same revenues without monetizing user access and user data for advertising. Users are essentially paying these amounts with their data, rather than with money, for access to Google and Facebook services. Exchanging data for services is not new: on the web, it dates back to 1994, when the Netscape browser introduced cookies as a way to help websites become viable commercial enterprises. Regulation is needed because data used inappropriately hurts people, a significant risk when data is shared with third parties.
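
The text gives the results but not the calculation. One plausible way to arrive at figures like these, assumed here rather than taken from the source, is to divide annual advertising revenue by the number of active users and then by 12 months. The function name is our own, and the inputs below are round illustrative numbers, not Google's or Facebook's actual revenue or user counts.

```python
def equivalent_monthly_fee(annual_revenue_usd: float, active_users: float) -> float:
    """Monthly price per user that would replace a year of data-driven revenue.

    Assumes revenue is spread evenly across users and months.
    """
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return annual_revenue_usd / active_users / 12


# Illustrative inputs only: US$120 billion in annual revenue, 1.5 billion users.
fee = equivalent_monthly_fee(120e9, 1.5e9)
print(f"US${fee:.2f} per user per month")
```

Real estimates must decide which revenue counts as data-driven and which user measure to divide by, which is why such figures are best treated as rough.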

The challenge with cookies and data monetization models is that they go against the individual-empowerment ethos of the internet. Cookies and third-party data-sharing models have been designed in such a way that people do not know whom they are sharing data with, so businesses and governments are creating rules on how and when data can and should be shared. There is a long-standing precedent in medical sciences and in-person marketing studies that people should be compensated for the use of their personal data. The current trend is that governments and consumers will hold companies increasingly liable for data and privacy breaches. The future trend will be to codify individual ownership of data into law, presuming that individuals own their data and are compensated for sharing it with companies and subsequent third parties. Current regulations like the GDPR apply to data that can identify individuals, not to anonymized data, however valuable it may be. Businesses today are tapping into a future in which individuals want to monetize their own data, empowering them to make data-for-service transactions transparently.
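
A rough sketch of the mechanism, using Python's standard `http.cookies` module: a third-party tracker embedded in many sites sets a cookie scoped to its own domain, so the browser sends the same identifier back to the tracker from every site that embeds it. The cookie name `uid`, the domain `tracker.example` and the helper function are hypothetical, and several browsers now restrict or block third-party cookies like this by default.

```python
from http.cookies import SimpleCookie


def tracking_cookie_header(user_id: str, tracker_domain: str, max_age_seconds: int) -> str:
    """Build the Set-Cookie header a hypothetical third-party tracker might send.

    Because the cookie is scoped to the tracker's domain rather than the site
    being visited, the browser returns the same ID to the tracker from every
    site that embeds it.
    """
    cookie = SimpleCookie()
    cookie["uid"] = user_id
    cookie["uid"]["domain"] = tracker_domain
    cookie["uid"]["max-age"] = max_age_seconds  # persists across visits
    # Browsers that still allow cross-site cookies expect SameSite=None and Secure.
    cookie["uid"]["samesite"] = "None"
    cookie["uid"]["secure"] = True
    return cookie.output()


header = tracking_cookie_header("abc123", "tracker.example", 60 * 60 * 24 * 365)
print(header)
```

Nothing in the header tells the user which sites embed the tracker, which is the opacity the paragraph above describes.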

It would be nice to assume that societies today are more enlightened and that the stakes are lower, but many of the defence and surveillance applications of ML make it clear that the stakes are no less high. There are leading technologists all over the world developing technologies demanded by their local governments, business communities and consumers. The tyranny of the majority and the exploitation of the vulnerable have been endemic to human nature along with nobility of spirit and equality under law. The latter forces seem more powerful in societies today but only through careful vigilance against the former. As machines enhance our capabilities to create more wealth and improve the world, they also give greater capacity to systems that degrade our environments and the people who live in them.

Perhaps the biggest concern related to the singularity and an exponential rate of technological change is that humans will not be able to fully digest one change before the next begins. Digesting a change in this context means understanding the implications of discrete and complementary changes, then adapting systems and behaviours to emphasize the good and minimize the bad. Regulations and codes of law exist in principle to protect rights and prohibit actions deemed to harm others. New technologies can unveil new rights and harms that people were previously unaware of or that did not previously exist. International protection of intellectual property was first established in the 1880s, by the Paris Convention (1883) and the Berne Convention (1886), after the second industrial age began. There is always a lag after technological advancements before effective codes of conduct are understood and regulations are put into place. If the conversation on the technologies of the third age is being had today, it will be decades before the conversation on the current age concludes, unless our ability to regulate new technologies increases along with our ability to create them.

Data Lakes

Comprehensive data storage began in the 1970s, with companies investing in physically managing the databases and computer architecture needed to capture and store information in a usable format. This information was captured in a structured format, stored in data warehouses and data marts, and directly applicable to whatever system or service was being provided at the time. The advent of the cloud made it much easier for companies to maintain IT architecture at lower cost and has enabled the development of complicated computer systems that scale easily. A large part of the internet and startup mentality is to grow companies and customer bases first, then monetize them later. Confidence that teams could gain scale and then use it to create sustainable revenue models led to the success of many notable business-to-consumer and marketplace internet businesses. This mentality, combined with the knowledge that Machine Learning and Deep Learning models can find impactful new uses for previously unusable data, has led companies to capture unstructured data in data lakes.
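
The difference between a warehouse and a lake is often summarized as schema-on-write versus schema-on-read. A warehouse validates data against a fixed structure as it is stored; a lake keeps raw records and applies structure only when someone reads them for a particular purpose. The sketch below illustrates schema-on-read with hypothetical JSON records and a helper of our own naming; production data lakes use dedicated storage and query engines rather than Python lists.

```python
import json

# Hypothetical raw records landed in a data lake exactly as they arrived:
# different sources, different shapes, no schema enforced on write.
RAW_LAKE = [
    '{"event": "click", "user": "u1", "ts": "2020-01-01T10:00:00"}',
    '{"event": "purchase", "user": "u2", "amount": "19.99"}',
    '{"sensor": "t-17", "temp_c": 21.5}',
]


def read_with_schema(raw_lines: list[str], schema: dict) -> list[dict]:
    """Apply structure at read time ("schema-on-read").

    `schema` maps each required field to a converter such as float or str.
    Records lacking any required field are skipped, not rejected at write time.
    """
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        if all(field in record for field in schema):
            rows.append({field: convert(record[field]) for field, convert in schema.items()})
    return rows


purchases = read_with_schema(RAW_LAKE, {"user": str, "amount": float})
readings = read_with_schema(RAW_LAKE, {"sensor": str, "temp_c": float})
print(purchases, readings)
```

The same raw store serves two unrelated questions here, which is the flexibility that makes lakes attractive when future uses of the data are not yet known.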

The median adoption rate of cloud computing in 2018 was 19%, a strikingly low figure compared with the 60% of end users who prefer cloud over on-premise systems. Data centres currently consume about 1% of global electricity, generated from sustainable and non-sustainable sources alike, a consumption that doubled over the last decade and is expected to triple or quadruple over the next. More and more organizations are shifting their IT hosting to the cloud for lower IT costs, higher system reliability and ease of scalability. They are also adopting data lakes, with studies finding that companies with data lakes outperform their peers in organic revenue growth. There are, however, very real reputational challenges involved in capturing unstructured data. Customers are increasingly distrustful of organizations that do not follow a lean data model, in which companies store only the data directly needed to provide a service, and a data breach can cost a business up to 19% of its customer base.

Businesses are adopting more sophisticated IT systems that lead to stronger business outcomes. Customers are also becoming more sophisticated and expect the services they use to have clear data policies and rigorous security practices. The environmental cost of digital systems is increasing with the exponential growth of computer systems around the world. In general, unstructured data requires either a human or a Machine Learning system to generate business insights. Businesses began using shared computer systems in the 1960s, and cloud computing in its modern form really began in the 2000s. After 60 or 20 years, depending on which starting point is used, the majority of businesses with IT systems are still not taking advantage of the benefits of cloud systems.

Tying these trends together produces a familiar picture. Machine Learning systems will be rolled out gradually, over decades, in the business community beyond R&D projects. Critical to their effectiveness will be the adoption of data lakes to capture unstructured data, while teams develop clearer data governance and the ability to process that data into a more structured format. The leading companies that drive improved data gathering and learning will be the ones that benefit. All the while, customers will punish companies that misuse the data they capture, and the energy needs of data centres will continue to rise. New data governance and planning methods like Data Fabric are changing how companies plan for data accessibility and infrastructure investment; companies are clearly developing new policies to better handle data as its value and fragility become increasingly apparent.

Internet of Things

The Internet of Things, along with cloud technology, has been the dominant trend of the current industrial age. Cell phones with ever-faster remote connectivity, close-proximity wireless networking, intelligent remote sensors for weather and other conditions, wearables and many other connected devices have profoundly changed the way people live. Just as the trends of past industrial ages carried on for decades and even centuries after they began, the deployment of connected devices will continue for decades, even after we enter the next technological age, whether it is enabled by more intelligent machines, a breakthrough in space travel or another transformational change. These devices are deployed across many sectors, connecting either directly to internet systems or via an intermediary transmitter.

IoT devices are widely adopted today and create a phenomenal set of use cases for Machine Learning systems by producing dynamic datasets that humans cannot review and respond to in a timely fashion. The complexity and scale of IoT projects keep increasing as teams become more sophisticated in their understanding of how to apply the technology. Machine Learning has been incorporated into the IoT ecosystem in two ways: in edge devices, where data latency, security and bandwidth issues are paramount, and in cloud systems, where they are not. Laptops, smartphones, TVs, game consoles and other devices built for human interaction are not usually considered IoT devices, because their primary role is to serve a human user rather than to exchange data with other machines; the key element of IoT is machine-to-machine communication over networks shared with machine-to-human devices. To really understand IoT, it is necessary to ground ourselves in the core operation of the internet.
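
As a sketch of the edge pattern, the class below runs on a hypothetical sensor and forwards only readings that look anomalous, so routine data never leaves the device. A rolling z-score stands in here for a trained on-device model; the class name, window size and threshold are assumptions to tune per sensor.

```python
import statistics
from collections import deque


class EdgeAnomalyFilter:
    """Runs on the device and decides which readings are worth sending upstream.

    A rolling z-score stands in for an on-device ML model in this sketch.
    """

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # only recent readings are kept
        self.threshold = threshold

    def should_send(self, reading: float) -> bool:
        """Return True if `reading` deviates strongly from recent history."""
        send = False
        if len(self.history) >= 2:
            mean = statistics.fmean(self.history)
            spread = statistics.stdev(self.history)
            if spread == 0:
                send = reading != mean  # any change from a perfectly flat signal
            else:
                send = abs(reading - mean) / spread > self.threshold
        self.history.append(reading)
        return send


# Hypothetical temperature readings with one spike.
readings = [20.0, 20.1, 19.9, 20.0, 20.2, 19.8, 20.1, 20.0, 35.0, 20.0]
sensor = EdgeAnomalyFilter(window=8, threshold=3.0)
sent = [r for r in readings if sensor.should_send(r)]
print(sent)  # only the spike leaves the device
```

Filtering at the edge trades some information for lower latency and bandwidth; a cloud-side model would instead receive every reading and could look for subtler, longer-term patterns.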

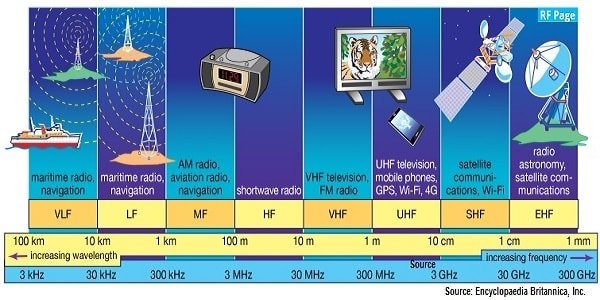

The internet is the collection of devices around the world that communicate with one another through global standards for information transfer. Information is sent through routers, switches, networks and other devices, using a system of unique identifiers to ensure the right information is accessed by the right devices at the right time. These devices are connected either physically through cables or at a distance through radio frequencies. Different categories of communication protocols use different frequencies; for instance, Bluetooth uses frequencies between 2.400 gigahertz (GHz) and 2.483 GHz, while 5G New Radio (NR) uses bands below 6 GHz as well as bands from 24 GHz to 100 GHz. The Internet of Things is the increasing connectivity of devices that do not interface with a human. It refers to the growth of the hidden part of the internet where human users do not go. We set up the devices, but increasingly they just work, so people leave them alone and enjoy this growing area of convenience. In the same way that telephone operators once connected phone calls by hand, we are removing people from their role as communication interfaces within the network of machines called the internet.
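
At the network layer, the unique identifiers are IP addresses. The sketch below uses Python's standard `ipaddress` module to compare the size of the IPv4 and IPv6 address spaces and to show the kind of membership check a router makes when deciding whether a packet stays on a local network. The network, the addresses and the `deliver_locally` helper are hypothetical, and real routing involves far more than this single check.

```python
import ipaddress

# Total address space of each Internet Protocol version.
IPV4_SPACE = ipaddress.ip_network("0.0.0.0/0").num_addresses  # 2**32, about 4.3 billion
IPV6_SPACE = ipaddress.ip_network("::/0").num_addresses       # 2**128

# A hypothetical home network with a connected thermostat, plus an outside server.
home_net = ipaddress.ip_network("192.168.1.0/24")
thermostat = ipaddress.ip_address("192.168.1.42")
remote_server = ipaddress.ip_address("203.0.113.7")


def deliver_locally(address, network) -> bool:
    """Simplified routing decision: deliver on the local network if the
    destination falls inside it, otherwise forward toward the wider internet."""
    return address in network


print(IPV4_SPACE, IPV6_SPACE)
print(deliver_locally(thermostat, home_net), deliver_locally(remote_server, home_net))
```

The size of the IPv6 space is one reason it is often discussed in connection with large deployments of connected devices.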

This connectivity has opened new frontiers of efficient resource use that were previously out of reach, as well as new negative business practices. Software updates that improve car driving systems and the sophistication of smartphones can be pushed out automatically with little to no additional cost or human involvement. The negative side of these updates is that some businesses have been accused of shortening the effective life of their hardware to the point where it no longer supports the latest software, encouraging people to buy new devices and promoting needless consumption when the new features are marginal or superficial improvements. Others have been accused of monetizing software updates in ways that mislead customers or force them to purchase upgrades to get the value they perceived as initially promised. Simple sending-and-receiving devices like sensors, whose complicated processing runs in cloud systems, can be used for long periods without replacement and improve significantly whenever those cloud systems are updated. Indeed, IoT connectivity has even created a shift toward eliminating human interfaces in new devices, as companies take advantage of user interfaces like smartphones and laptops already in consumers' hands. This drive to connect all devices to the internet has also created security vulnerabilities, as people have shown that they can hack planes and cars while in operation.

The trend towards an IoT future is clear, with Smart Cities, Connected Industry, Connected Buildings, Connected Cars and Smart Energy accounting for 73% of all IoT projects in 2018. It makes sense that IoT offers the greatest possible benefit where the concentration of people and computer systems is greatest. Leading companies have already implemented IoT with ML systems to improve resource efficiency and reduce costs. More powerful wireless networking tools that transmit more data faster are being unveiled, with 5G the latest advancement, available in 24 international markets. These projects will become commonplace over the coming decades as current devices reach end of life and are replaced with IoT versions, and as less equipment is made with human interfaces. The growing number of connected devices creates an amazing opportunity for Machine Learning systems: more devices means more data arriving quickly from dynamic environments, which need advanced systems to react quickly through more interfaces. As ML systems become more powerful and trusted, they will manage more IoT device functions predictively to improve efficiency. Trust in IoT ML will develop gradually, through landmark projects that lead the way along with the necessary oversight and regulation. One potential concern for the future of IoT, still being studied, is the ecological and human impact of increasing exposure to radio waves. As well, securing devices, the data they generate and the networks they connect to will continue to grow as an area of necessary investment.
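
Managing devices predictively often starts with something as simple as extrapolating a trend. The sketch below fits a straight line to hypothetical hourly vibration readings from a motor and estimates how many hours remain before they cross a maintenance threshold. The linear trend, the threshold value and the function names are assumptions chosen for clarity; real predictive-maintenance systems typically use richer models and account for noise.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours: list[float], readings: list[float], threshold: float):
    """Extrapolate a rising trend to estimate when `threshold` will be crossed.

    Returns None if readings are flat or falling (no crossing predicted).
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical vibration readings from a motor, sampled hourly.
hours = [0, 1, 2, 3, 4]
vibration = [1.0, 1.2, 1.4, 1.6, 1.8]
print(hours_until_threshold(hours, vibration, threshold=3.0))  # about 6 hours
```

An estimate like this lets maintenance be scheduled before a failure rather than after it, which is the efficiency gain the paragraph above anticipates.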

Social engineering

A concept born in the social sciences that has recently taken on a new context, social engineering refers to influencing people to act in ways that may or may not be in their best interests. Machine Learning, like robocalls, enables this influence to happen en masse over the internet, where the protections afforded to other channels of influence like TV, billboards or mailed letters are not available. As the IoT part of the internet grows and gradually becomes more secure, humans are increasingly the points of vulnerability that bad actors target to access secure systems or information. In high-profile instances, millions of dollars in cryptocurrencies, as well as identities, have been stolen through social engineering techniques. Many of these techniques rely on publicly available social media information, and data breaches at major corporations only make it easier for black hat hackers to access more secure information and financial accounts.

Social engineering techniques have developed as the methods used to influence or persuade others to believe subjective ‘truths’ have become better understood. Governments and corporations have long worked on the societal psyche to influence people to take collective action. Often it is innocuous, as when companies want us to buy a new product or prefer their products over the competition. It can go much deeper, with governments asking us to go to war or invest in projects for the collective good. Green bonds are a recent example, in which governments and corporations have developed an investment instrument to get more people and companies investing in climate-saving projects. Salesforce developed an AI Economist as a technocratic approach to some of the biggest societal challenges faced today; the team works with economists while applying Machine Learning techniques to analyze datasets and help inform policy decisions at governmental and international scale. Money and investment management teams are deploying ML systems en masse to make objective and consistent decisions about which companies to support with their capital. The rise of global pandemics like COVID-19 has increased the urgency of coordinated collective behaviours such as social distancing, quarantining and contact tracing. This urgency is tempered by real concerns that applying technology to these problems can result in an overly powerful, potentially dystopian state and eroded personal freedoms.

Machines and humans are each better at certain distinct operations, and together they create a symbiosis that is greater than either part alone. China is blazing a trail in the use of machines for social engineering through AI judges and a social scoring system. Western nations have long used credit scores to establish and maintain a shared record of the financial reputation of people and businesses applying for credit. The institutions that maintain and check credit scores have sought to broaden their use to background checks for employment and insurance applications. These scores have enormous impacts on people's lives and, if calculated erroneously, create an opaque system with unfair consequences. Computer systems to expedite judicial decisions are being tested in different jurisdictions and will likely roll out globally, aiming to enable faster, more consistent decisions while human judges retain the ability to overrule them in exceptional circumstances.

Robot social engineering through economic, legal and other systems has the potential to create fairer and more equal systems that benefit individual human actors; just as in sports and competitions, it is easier to know how to consistently win when the rules are understood and applied fairly. There is also an insidious side to these systems. Robert Cialdini is a leading example of a researcher studying the psychological methods of persuasion that are most effective at influencing individual behaviour. It may seem difficult to believe, but in spite of the best efforts of ML-based email spam filters, people are still falling for Nigerian prince scams. The nature of influence has not changed, and neither have the motivations of people seeking to influence others. If anything, robot systems will amplify the scale and impact of human behaviour on one another, for good and ill.
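
Naive Bayes classification is one of the classic techniques behind ML spam filtering, and a minimal version fits in a few lines. The training messages below are made up for illustration, the function names are our own, and this is not a description of any particular email provider's filter. It also hints at why scams still get through: a message built from words the filter has not learned to associate with spam gets little push toward the spam side.

```python
import math
from collections import Counter


def train(messages: list[tuple[str, bool]]):
    """Count words per class. `messages` holds (text, is_spam) pairs."""
    word_counts = {True: Counter(), False: Counter()}
    class_counts = Counter()
    for text, is_spam in messages:
        class_counts[is_spam] += 1
        word_counts[is_spam].update(text.lower().split())
    return word_counts, class_counts


def spam_log_odds(text: str, word_counts, class_counts) -> float:
    """Multinomial naive Bayes with add-one smoothing.

    Returns log P(spam | words) - log P(not spam | words); positive means spam is more likely.
    """
    vocab_size = len(set(word_counts[True]) | set(word_counts[False]))
    spam_total = sum(word_counts[True].values())
    ham_total = sum(word_counts[False].values())
    log_odds = math.log(class_counts[True]) - math.log(class_counts[False])  # prior odds
    for word in text.lower().split():
        p_spam = (word_counts[True][word] + 1) / (spam_total + vocab_size)
        p_ham = (word_counts[False][word] + 1) / (ham_total + vocab_size)
        log_odds += math.log(p_spam) - math.log(p_ham)
    return log_odds


# Made-up training data for illustration.
examples = [
    ("urgent transfer from prince need your bank details", True),
    ("claim your inheritance send bank details now", True),
    ("meeting moved to thursday afternoon", False),
    ("can you review the quarterly report", False),
]
word_counts, class_counts = train(examples)
print(spam_log_odds("prince needs your bank details", word_counts, class_counts))  # positive
print(spam_log_odds("review the report on thursday", word_counts, class_counts))   # negative
```

Add-one smoothing keeps unseen words from producing a probability of zero, at the cost of letting them contribute almost nothing to the decision.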

Using ML and robot systems to influence behaviour gives people the ability to understand and influence societies on a national and international level, often without people realizing how they are being influenced. It was a turning point in internet advertising and marketing when people became aware of how cookies were being used to track their browsing behaviour and customize individual ads based on it. The use of social media to influence political outcomes through voter-specific targeting has changed the course of elections and referendums, arguably through subversive means. Institutions like the free press, policing agencies, governments and academic programs have increasingly revealed agendas that may or may not align with individuals’ values. What is clear is that humans will use these systems to influence people more and more; it will be our responsibility to know who is acting to engineer societies and what their interests are. If there was ever a time to influence new behaviours on a societal level, it is now: pandemics and climate change are urgent challenges that require collective action and new ways of living in this world.

Open-source code

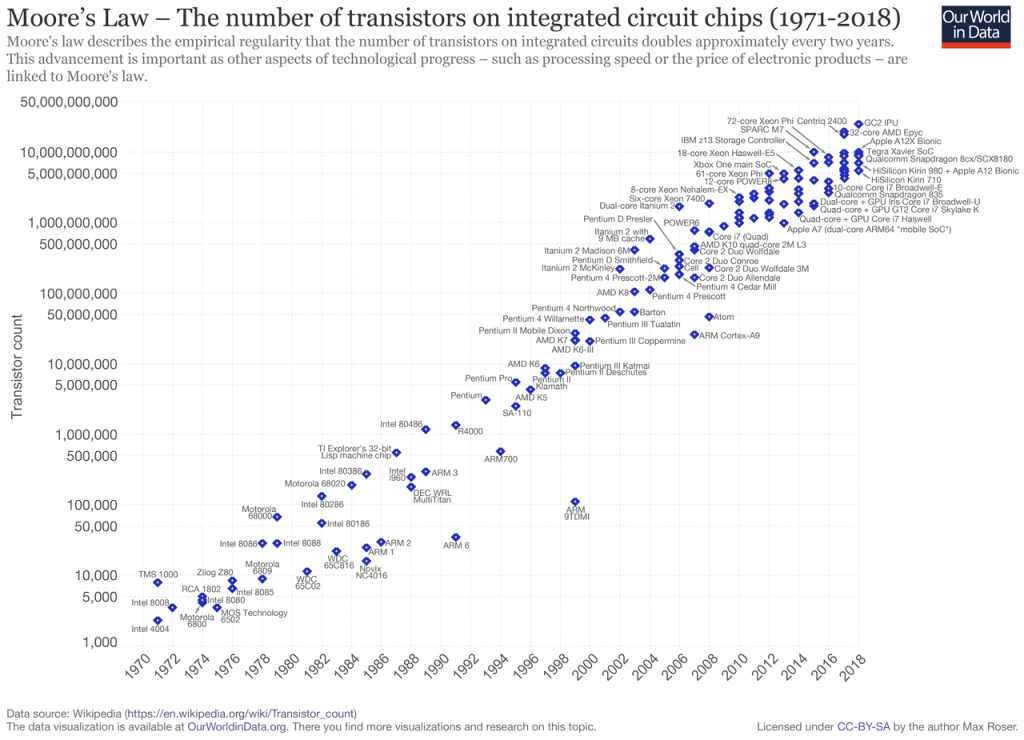

The pace of technological change is increasing, at least according to Moore’s Law. The most commonly cited example of accelerating technological change is the doubling of the number of transistors on a chip, and with it processing power, roughly every 18 to 24 months, a trend first identified by Gordon Moore in 1965. This exponential growth in computing power has driven other major technological advancements like personal computing and smartphones as devices became more portable and accessible. Moore’s Law, however, is not without limit. Many leading publications now view the prediction as a self-fulfilling prophecy that is running into diminishing returns, as the development cost of creating new chips that double processing power keeps rising. This need not be cause for alarm: the integrated circuit of transistors on silicon chips is expected to be succeeded by a new standard, with gallium nitride one likely candidate.
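To make the compounding behind Moore’s Law concrete, here is a minimal Python sketch, assuming an idealised, perfectly constant doubling period (real chip progress has never been that smooth). The function `growth_factor` is a hypothetical helper for illustration; it simply applies the formula 2^(t / T), where t is the elapsed time and T is the doubling period.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Return how many times capability multiplies after `years`,
    assuming (hypothetically) it doubles once every `doubling_months` months."""
    doublings = (years * 12) / doubling_months  # number of completed doubling periods
    return 2 ** doublings


# Compare the two ends of the commonly quoted 18-to-24-month range over one decade.
for months in (18, 24):
    print(f"Doubling every {months} months -> x{growth_factor(10, months):,.1f} after 10 years")
```

Over a single decade, the gap between an 18-month and a 24-month doubling period works out to roughly a threefold difference in final capability (about 101.6× versus 32×), which is why even small changes in the pace of Moore’s Law matter so much.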

Indeed, technologies and systems are regularly displaced as we trend towards the solutions that deliver the greatest benefit and discard the ones that do not. When the 2G telecommunication standard arrived, 1G and the devices not compatible with 2G were discarded. The switch to 2G, driven by its ability to access digital services (AKA data), mirrored the later shift to the iPhone and smartphones, a change to which people adapted just as rapidly. The inevitability of newer, better technologies replacing older ones is just as apparent in computer systems. With Apple, Microsoft, Google and other tech titans constantly releasing software updates that take advantage of the new hardware advancements driving their innovation leadership, the link between software systems and hardware is undeniable.

Going further, the current industrial revolution has been powered by improving telecommunication infrastructure and exponential increases in computer processing power. The challenge for businesses operating in this environment is to remain relevant to current capabilities and customer demand. The Innovator’s Dilemma, Clayton Christensen’s seminal work, explores how and why incumbent organizations, with all of their resources and expertise, are disrupted by new organizations better suited to manage evolutions in customer demand. Open-source business models are a strategic option for software companies that license their code in a way that mitigates some of this disruptive potential. These companies allow other teams to access the source code of specific software products to make changes and recommendations, and to build businesses on newly formed code branches under varying degrees of commercial limitation. The hope is that if new companies or projects are allowed to build on pre-existing code, they will use the available code rather than building everything from scratch, keeping the original company’s software at the centre of what gets built.

Google’s release of Android was a landmark moment for Microsoft. It marked a huge shift away from the Windows operating systems (OS) towards a new device standard, in a market where Microsoft would traditionally have been a fast follower. Google cornered the OS market for non-Apple smartphones by open-sourcing the Android OS code. This gave phone manufacturers a zero-cost, high-quality, customizable OS connected to the ecosystem of applications their customers demanded, which is where Google makes its money. This practice of making money on add-ons to an open-source codebase follows the open-core model, one of many potential open-source business models. Leading software providers like Cloudera, MongoDB and Red Hat all employ open-source business models to varying degrees and have all been quite successful. Open-source projects like TensorFlow, Apache Hadoop and Apache Spark have already been shaping Machine Learning projects for some time. As existing software companies strive to remain relevant, open-source code and business models will continue to have a major impact. Blockchain and digital-first currencies are among the great innovations to come from the open-source community. They represent the possibility of decentralizing access to data and creating secure, unique value-transfer systems on the internet that mimic cash changing hands in real life, though these innovations are held back by the complex social systems that govern the financial transfer systems we use today.

Space travel

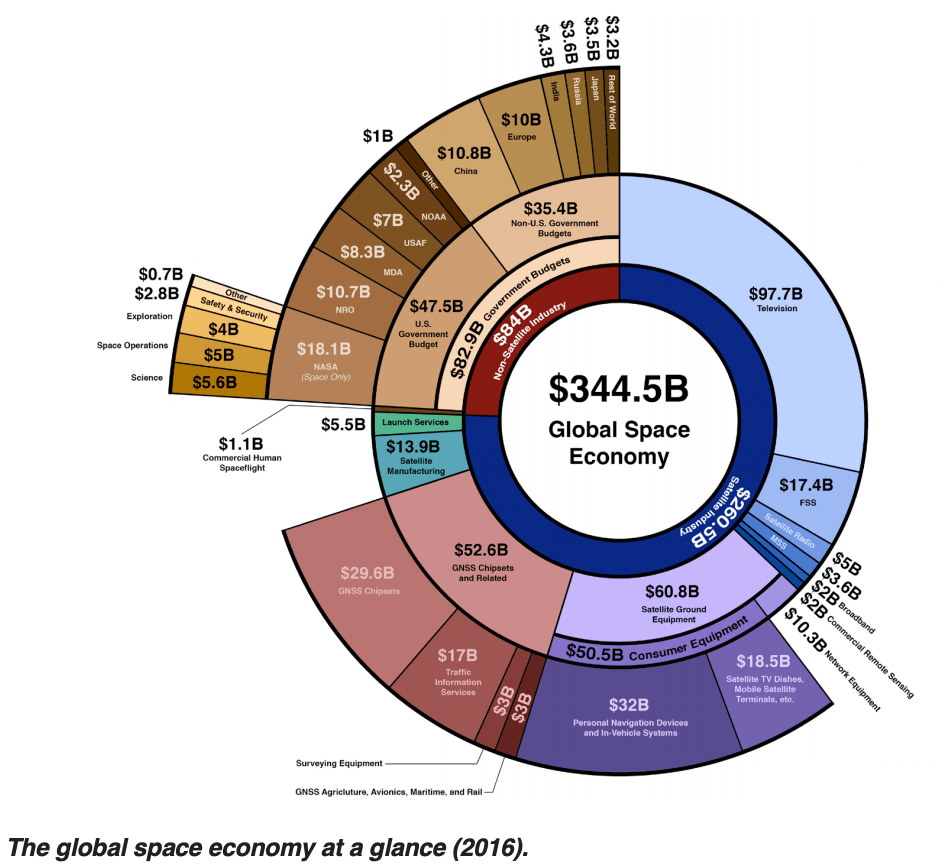

The privatization of space, and the possibilities therein, is a tremendous opportunity for humanity. NASA, ISRO, Roscosmos, ESA and CNSA have all made huge progress in transport rockets, computer systems and human habitats, making accessible the environment least hospitable to humans. On a grand scale, space exploration has dominated national agendas as a means of demonstrating terrestrial power and gaining leverage over chosen rivals. On a commercial scale, satellites orbiting the earth have made tremendous opportunities in telecommunications and ground surveillance possible. These two areas are classed as the non-satellite and satellite industries respectively, with a combined global market value of $344.5 billion in 2016.

SpaceX was able to enter the space economy by providing lower-cost launches for satellites in earth orbit. The company has since expanded its services to include resupply missions to the International Space Station (ISS) and, soon, the rotation of crew members on human-rated rockets. The ISS itself is a tremendous commercial opportunity for companies looking to do advanced research and development in microgravity and the vacuum of space; NASA is advancing an agenda to introduce more commercial ownership of the station to free up budget for space exploration. Space exploration opens up two key areas of opportunity for the benefit of humanity: resource extraction from asteroids and colonization of the solar system. The call to space comes with huge upfront costs and unclear payoff periods. Even now, companies like SpaceX come under criticism for using expertise NASA developed to complete missions that NASA technologies had already proven possible, if not economical. The role of government-funded agencies like NASA is crucial to developing the framework for the commercialization of space. Treaties establishing how to handle space debris that threatens orbiting infrastructure, along with property rights over resources mined in space, are still being worked out and will provide a much-needed legal framework for off-planet activity.

The space race to the moon advanced a wide variety of technologies and pushed the development of integrated circuits. It stands to reason that if resources were marshalled again to develop sustainable habitats in space and more advanced transport, construction or mining systems, another wave of innovation would follow from that directed focus. Given the harsh environment and vast transmission distances involved, space systems need more intelligent machines that can address a wide variety of these problems. Advanced machine learning techniques like deep learning can be, and are being, applied to develop the next-generation sensors, radiation-resistant computer systems, mapping, signal transmission, self-landing rockets and self-driving rovers needed for more cost-effective and far-reaching space programs. Numerous technologies and computer systems developed for space exploration went on to become commercial successes in earthbound markets; this pattern will likely continue and have a tremendous impact on the development of new, more intelligent machines.

Wrapping up

There are other long-term trends that will affect the application of Machine Learning systems, like climate change and new data-sharing methods from decentralized technologies like blockchain. These trends will likely open up opportunities for new business models and new human problems to solve, rather than propel or shape the evolution of intelligent robot systems the way the trends above do. Machine Learning is a tool that will continue to be developed around hardware components and software techniques that can be repurposed to address other problems that are similar from an engineering standpoint. The beauty of Deep Learning systems is that they allow decision-making problems to be abstracted to the point where a system can be applied broadly without much additional investment for each new problem, similar to how a high-level athlete can usually be competitive in any sport, at least at an amateur level. We’re still in the early years of applying Deep Learning, while Machine Learning has already given us amazing efficiencies in highly specialized systems with structured inputs like search or image recognition. It’ll be amazing to see what happens when we get a Deep Learning Usain Bolt, and how people continue to apply Machine Learning to some of the greatest challenges facing people today, and tomorrow.